# What is Conform?

Conform is a toolset for trace-based testing and verification of cyber-physical systems.

Auxon's SpeQTr language lets you write sophisticated specifications of how your system should behave, whether you are building a test suite or directly encoding system requirements. With Conform, you first record system execution using Modality and then evaluate specifications against the recorded execution. Exercising your system is therefore separate from verifying it, which simplifies both. You can keep different versions of tests over time, share results across teams, recall past results, and evaluate tests against historical data to determine when a problem was introduced. When you encounter a failure, Conform can tell you exactly which part of the specification was violated, putting you well on your way to resolving the issue.

# Executing Specs

Conform is all about executable specifications, written in the SpeQTr language. You write your specs and give them to Conform, where they can optionally be stored for reference and version tracking. You then ask Conform to execute them against traces stored in Modality.

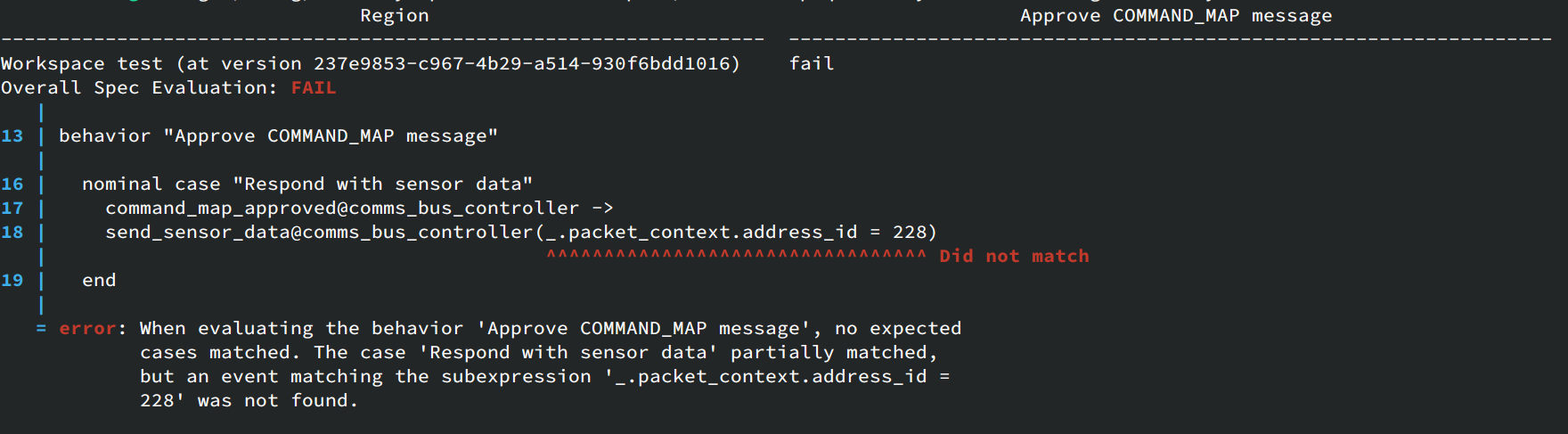

Conform then analyzes the traces and checks whether the captured data is consistent with the behaviors described in your spec. If it is not, Conform tells you both which part of the execution failed the spec and which part of the spec was violated. It can often narrow this down to a specific part of the spec, dramatically reducing the time required to diagnose system-level test failures.
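The idea of checking a recorded trace against a spec, and reporting both the failing part of the execution and the violated part of the spec, can be sketched in plain Python. This is only a conceptual illustration: it is not SpeQTr, and it does not use Conform's or Modality's APIs. The `Event`, `Clause`, `Violation`, and `check` names, the event names, and the "response within a deadline" rule are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One recorded event in a trace (hypothetical shape)."""
    timestamp: float  # seconds since the start of the recording
    name: str


@dataclass(frozen=True)
class Clause:
    """One named part of a spec: each trigger needs a response within a deadline."""
    label: str
    trigger: str
    response: str
    within_s: float


@dataclass(frozen=True)
class Violation:
    """Pairs the violated part of the spec with the offending part of the execution."""
    clause: str
    event: Event


def check(trace: list[Event], spec: list[Clause]) -> list[Violation]:
    """Evaluate every clause over a trace that is assumed sorted by timestamp."""
    violations = []
    for clause in spec:
        for i, ev in enumerate(trace):
            if ev.name != clause.trigger:
                continue
            deadline = ev.timestamp + clause.within_s
            # Look only at events recorded after the trigger.
            answered = any(
                later.name == clause.response and later.timestamp <= deadline
                for later in trace[i + 1:]
            )
            if not answered:
                violations.append(Violation(clause.label, ev))
    return violations


# A trace recorded earlier; verification runs separately from execution.
trace = [
    Event(0.0, "boot"),
    Event(0.1, "heartbeat"),
    Event(0.3, "command_sent"),
    Event(0.5, "command_acked"),
    Event(1.0, "command_sent"),
    Event(1.9, "command_acked"),  # arrives too late for the second command
]

spec = [
    Clause("heartbeat_after_boot", "boot", "heartbeat", 2.0),
    Clause("ack_within_500ms", "command_sent", "command_acked", 0.5),
]

violations = check(trace, spec)
for v in violations:
    print(f"clause {v.clause!r} violated by {v.event.name!r} at t={v.event.timestamp}")
```

Because the trace is plain recorded data, the same `check` call could be rerun later against older recordings or a revised spec without exercising the system again.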

# Authoring Specs

To support writing SpeQTr specs, Conform comes with a Visual Studio Code plugin (and a language server implementation) that provides rapid feedback on syntax errors, plus autocompletion based on already-collected trace data. This significantly streamlines "backwards" specification writing, where specs are written against trace data that has already been collected, which is very common in practice.